I identify as utilitarian.

That is not to say that I believe in the weird sort of aggregative "add the happiness scores" moral codes that prefer a society of a billion slaves and one obscenely happy person to today's world. It is to say that I believe (approximately) that the highly complicated human notion(s) of "good" obey(s) the von Neumann–Morgenstern axioms, given sufficient computational resources and bias correction.

And so I decided I may as well get around to explaining and playing around with the VNM axioms and a couple of little mathematical-philosophical thoughts surrounding them. And yes, this is hardly groundbreaking stuff never before seen on the Internet, but hey, it could be worse: I could be trying to explain what Haskell monads are.

What things have utility?

Let \(\mathcal{O}\) be the set of outcomes. These represent events like "the cat lives" and "the cat dies", as well as more probabilistic events like "50% chance the cat lives".

(Yes, probability. As befits any paradigm that might end up being used in decision-making, we're acknowledging uncertainty, whether that's the "I can't predict tomorrow's weather" kind or the "quantum superposition" kind that foils even Laplace's demon.)

We could write the three cat examples above as stochastic vectors: \[ \left(\begin{array}{c} 1 \\ 0 \end{array}\right), \left(\begin{array}{c} 0 \\ 1 \end{array}\right), \left(\begin{array}{c} \tfrac{1}{2} \\ \tfrac{1}{2} \end{array}\right) \]

Stochastic vectors (column vectors with non-negative entries summing to 1) are a way of denoting one kind of simple, finite probability distribution, but these could just as easily be continuous functions or any other representation.

Compare and contrast to wave functions, which are \(l^2\) unit vectors in complex Euclidean space.

(For our resulting analysis it seems to me that any convex subset of a vector space would do. In particular, for any two outcomes \(o_1,o_2 \in \mathcal{O}\) and any \(0 < \alpha < 1\), we need \(\alpha o_1 + (1-\alpha) o_2 \in \mathcal{O}\), i.e. outcomes are closed under probabilistic combination, i.e. "weighted averaging". However, for sensibility of interpretation, we also probably want all the extreme points to be linearly independent.)
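As a minimal sketch of what closure under probabilistic combination looks like for the finite, stochastic-vector case, here is some Python. The names `mix`, `is_stochastic`, `cat_lives` and `cat_dies` are made up for illustration, and exact fractions are used only so that the sum-to-one check is not disturbed by rounding.

```python
from fractions import Fraction

def mix(alpha, o1, o2):
    """Return the probabilistic combination alpha*o1 + (1 - alpha)*o2."""
    return tuple(alpha * p + (1 - alpha) * q for p, q in zip(o1, o2))

def is_stochastic(o):
    """Check that the entries are non-negative and sum to exactly 1."""
    return all(p >= 0 for p in o) and sum(o) == 1

# The two "pure" cat outcomes as stochastic vectors (hypothetical names).
cat_lives = (Fraction(1), Fraction(0))
cat_dies = (Fraction(0), Fraction(1))

# Mixing the two pure outcomes 50/50 gives the third cat example.
coin_flip = mix(Fraction(1, 2), cat_lives, cat_dies)
print(coin_flip)
print(is_stochastic(coin_flip))
```

Mixing two stochastic vectors this way always yields another stochastic vector, which is the closure property the parenthetical asks for.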

It's important to note that outcomes are in fact very, very complicated things. Hence I should explicitly state this preliminary "axiom" about our choice of outcome space:

Axiom 0 (outcome consequentialism): \(\mathcal{O}\) is defined broadly enough to contain all information about the moral goodness/badness of an outcome.

Example: Say that we believe that flipping a coin to decide whether a cat lives or dies is evil. Then we must define an \(\mathcal{O}\) such that any outcome vector contains information about whether any coins were flipped.

Our outcome space might then be the probabilistic combinations of "cat lives after coin flip", "cat dies after coin flip", "cat lives after no coin flip", and "cat dies after no coin flip".

The von Neumann–Morgenstern axioms

Time to start incorporating moral judgements into our model!

Definition (preference): For any two \(a, b \in \mathcal{O}\), we say \(a \prec b\) when \(a\) is "worse than" \(b\), where "worse", "better" and "just as good/bad as" are taken according to... whatever definition we're unpacking. (We're developing a framework here, not a One True Moral Code.)

Axiom 1 (completeness): for every pair of outcomes \(a, b \in \mathcal{O}\), exactly one of the following holds: \(a \prec b\), \(a \sim b\) (that is, \(a\) is just as good/bad as \(b\)), or \(a \succ b\).

Axiom 2 (transitivity): for all \(a, b, c \in \mathcal{O}\), if \(a \preceq b\) and \(b \preceq c\), then \(a \preceq c\). (Here \(a \preceq b\) means that either \(a \prec b\) or \(a \sim b\).)

These first two axioms are both common-sense enough, and that's intentional — utilitarianism is meant to formalise existing moral intuitions, not subvert them.

If your response to a question of the form "would you prefer \(a\) to \(b\)?" is "It depends...", then you haven't been given enough information (i.e. Axiom 0 is broken). It's not unlike someone asking you "Are even numbers bigger than odd numbers?".

(This is important to stress. If VNM-style utility suffers from a pragmatic problem, it's overcomplication, not oversimplicity.)

Lemma: \((\mathcal{O}, \preceq)\) is a total preorder (and becomes a total order once outcomes that are just as good as each other are identified).

The remaining two axioms are perhaps a little more controversial:

Axiom 3 (continuity): for all \(a, b, c \in \mathcal{O}\) such that \(a \prec b \prec c\), there exists some \(0 < \alpha < 1\) such that \(\alpha a + (1-\alpha) c \sim b\), i.e. the mixture is just as good as \(b\) (it need not be the same outcome as \(b\)).

Example: Say that I believe that "nothing happening" \(\prec\) "winning $5000" \(\prec\) "winning $10000".

Then according to the axiom of continuity, I should also consider "\(X\) chance of winning $10000" to be just as good as "winning $5000", for some probability \(X\). (Note that this probability isn't necessarily 50%, though!)

If I was being threatened by debt collectors who wanted exactly $10K in the next five minutes, I'd probably set \(X\) fairly low — a solid $5K would not mean nearly as much to me as a long shot at getting the money I needed to save my life.

On the other hand, if I was being threatened by debt collectors who wanted exactly $3 in the next five minutes, I'd probably set \(X\) fairly high.

Axiom 4 (independence): for all \(a, b, c \in \mathcal{O}\) such that \(a \prec b\), and for all \(0 < \alpha < 1\), \(\alpha a + (1-\alpha) c \prec \alpha b + (1-\alpha) c\).

Example: Say that you prefer "free chocolate ice cream" to "free vanilla ice cream".

Then according to the axiom of independence, you prefer "50% chance of death, 50% chance of free chocolate ice cream" to "50% chance of death, 50% chance of free vanilla ice cream".

Furthermore, you prefer "50% chance of free TARDIS ride, 50% chance of free chocolate ice cream" to "50% chance of free TARDIS ride, 50% chance of free vanilla ice cream".

This example neatly shows the intuition behind the axiom of independence. Compare and contrast to independence of irrelevant alternatives in decision/voting theory (e.g. Arrow's theorem).

Example: Say that you prefer "nothing happens" to "2% chance of death, 98% chance of winning $100".

Then according to the axiom of independence, you prefer "50% chance of death, 50% chance of nothing happening" to "51% chance of death, 49% chance of winning $100".
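The 51%/49% figures come from mixing each option 50/50 with certain death, i.e. taking \(\alpha = \tfrac{1}{2}\) and \(c\) = death in Axiom 4. A quick sketch to check that arithmetic follows; the dictionary representation and the names `mix`, `gamble`, `worse` and `better` are illustrative assumptions, not anything from the axioms themselves.

```python
from fractions import Fraction

def mix(alpha, o1, o2):
    """alpha*o1 + (1 - alpha)*o2, for lotteries given as {event: probability}."""
    events = set(o1) | set(o2)
    return {e: alpha * o1.get(e, 0) + (1 - alpha) * o2.get(e, 0) for e in events}

# The three outcomes from the example.
death = {"death": Fraction(1)}
nothing = {"nothing": Fraction(1)}
gamble = {"death": Fraction(2, 100), "win $100": Fraction(98, 100)}

half = Fraction(1, 2)
worse = mix(half, gamble, death)    # the less-preferred option mixed with death
better = mix(half, nothing, death)  # the preferred option mixed with death

print(worse["death"], worse["win $100"])
print(better["death"], better["nothing"])
```

The extra 1% of death in the second lottery is the original 2% risk, halved by the 50% mixing weight.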

This second example illustrates how the axiom of independence doesn't always mesh well with our intuitions of probability. Many people would consider a 2% risk of death to be nowhere near worth a hundred dollars, but would prefer having the money thrown in in the latter case.

I could argue it's fairly natural to compare probabilities in terms of ratios — a 2% risk of death is infinitely worse than a 0% risk, whereas 51% and 50% feel like practically the same thing. That said, this doesn't mean that ratios are the correct way to compare probabilities:

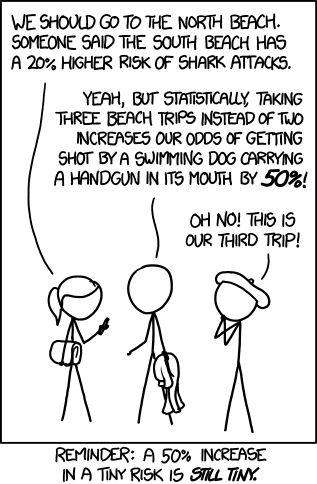

A 50% increase in a tiny risk is still tiny.

Credit: "Increased Risk", xkcd.

However, just as with Tversky and Kahneman's seminal "save 200 lives"/"400 people die" study into how framing affects preferences, reframing the "50/50 vs. 51/49" choice into an equivalent but different-feeling choice can cause intuition to pick the other option.

Thinking of the second set of choices as "a 50% brush of death, followed by (if you're alive) one of the first two choices" makes the difference in death-probabilities seem significant again (at least to me). Determining which frame is the "correct" frame to make a preference decision with is... non-trivial, to say the least.

(When I said earlier that I believed human notion(s) of "good" obeyed the VNM axioms "given sufficient computational resources and bias correction", one major part of the computational difficulty I was referring to was the amount of analysis required to resolve one's conflicting intuitions in cases like this.)

Utility

Theorem (von Neumann–Morgenstern utility theorem): Assuming Axioms 1-4 hold, then there exists some [not necessarily unique] linear function \(u: \mathcal{O} \rightarrow \mathbb{R}\) such that: \[o_1 \prec o_2 \Leftrightarrow u(o_1) < u(o_2)\]

(Okay, in all honesty, I used "linear" to mean "preserving weighted averages"... y'know, \(u(\alpha o_1 + (1-\alpha) o_2) = \alpha u(o_1) + (1-\alpha) u(o_2)\)... but the word just seemed snappy and close-enough, you know?)
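For a finite outcome space, "preserving weighted averages" means that \(u\) is pinned down by its values on the pure outcomes and extends to any lottery as an expected value. Here is a minimal sketch of that property; the utility numbers are invented for illustration, and `expected_utility` and `mix` are hypothetical helper names.

```python
from fractions import Fraction

def expected_utility(u, lottery):
    """Extend utilities on pure outcomes to a lottery {outcome: probability}."""
    return sum(p * u[o] for o, p in lottery.items())

def mix(alpha, l1, l2):
    """alpha*l1 + (1 - alpha)*l2 for lotteries given as {outcome: probability}."""
    outcomes = set(l1) | set(l2)
    return {o: alpha * l1.get(o, 0) + (1 - alpha) * l2.get(o, 0) for o in outcomes}

# Made-up utilities on three pure outcomes (an assumption, not from the post).
u = {"nothing": Fraction(0), "win $5000": Fraction(7, 10), "win $10000": Fraction(1)}

l1 = {"nothing": Fraction(1, 2), "win $10000": Fraction(1, 2)}
l2 = {"win $5000": Fraction(1)}
alpha = Fraction(1, 4)

# u(alpha*l1 + (1 - alpha)*l2) versus alpha*u(l1) + (1 - alpha)*u(l2)
lhs = expected_utility(u, mix(alpha, l1, l2))
rhs = alpha * expected_utility(u, l1) + (1 - alpha) * expected_utility(u, l2)
print(lhs, rhs)
```

Both sides agree for any choice of lotteries and weight, which is all the "linearity" in the theorem is claiming.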

Proof (sketch): Assume there are at least two distinct events \(a \prec b\) (and if not, pick any constant function). Set \(u(a) = 0\) and \(u(b) = 1\), and pick all remaining values in a way consistent with Axiom 3.

Proving that our construction of \(u\) is "weighted-average linear" and preserves preference ordering is slightly more involved, and relies on all four axioms. (It's easy enough to do by induction in the case where the "basis vectors" of \(\mathcal{O}\) are finite.)
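One way to read "in a way consistent with Axiom 3" is the following sketch of the usual construction (it may not be exactly the one intended here). Write \(x \sim y\) for "\(x\) is just as good as \(y\)". For any other outcome \(o\), continuity supplies some \(0 < \alpha < 1\) for whichever of the three cases applies, and demanding that \(u\) preserve weighted averages with \(u(a) = 0\) and \(u(b) = 1\) then forces the value of \(u(o)\):

\[ \begin{align} a \prec o \prec b,\quad \alpha a + (1-\alpha) b \sim o &\implies u(o) = 1 - \alpha \\ o \prec a,\quad \alpha o + (1-\alpha) b \sim a &\implies u(o) = -\frac{1-\alpha}{\alpha} \\ b \prec o,\quad \alpha a + (1-\alpha) o \sim b &\implies u(o) = \frac{1}{1-\alpha} \end{align} \]

Outcomes that are just as good as \(a\) or \(b\) get 0 or 1 respectively. Checking that \(\alpha\) is unique in each case, so that \(u\) is well defined, is part of the more involved work mentioned above.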

Notice we didn't say anything about the uniqueness of the function, and that's because these "utility functions" aren't unique.

Example: Say that Popopo is presented with three outcomes: "vanilla ice cream", "chocolate ice cream", and "no ice cream". His preferences obey the VNM axioms, and it happens that he thinks "vanilla ice cream" is just as good as "80% chance of chocolate ice cream, 20% chance of no ice cream".

Then one possible utility function for Popopo is: \[ \begin{align} u(\textrm{none}) &= 0 \\ u(\textrm{chocolate}) &= 1 \\ u(\textrm{vanilla}) &= 0.8 \end{align} \]

However, this is not the only possible utility function that matches up with his preferences! Here's another one: \[ \begin{align} u'(\textrm{none}) &= 17 \\ u'(\textrm{chocolate}) &= 32 \\ u'(\textrm{vanilla}) &= 29 \end{align} \]
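Both sets of numbers can be checked against Popopo's stated indifference. The sketch below also shows that \(u'\) happens to equal \(15u + 17\), a positive rescaling plus a shift, which is why it orders every lottery the same way. The dictionary names and the `expected_utility` helper are illustrative assumptions.

```python
from fractions import Fraction

# The two utility functions from the example, on the three pure outcomes.
u = {"none": Fraction(0), "chocolate": Fraction(1), "vanilla": Fraction(4, 5)}
u_prime = {"none": Fraction(17), "chocolate": Fraction(32), "vanilla": Fraction(29)}

def expected_utility(util, lottery):
    """Weighted average of utilities for a lottery {outcome: probability}."""
    return sum(p * util[o] for o, p in lottery.items())

# "80% chance of chocolate ice cream, 20% chance of no ice cream"
mixture = {"chocolate": Fraction(4, 5), "none": Fraction(1, 5)}

# Each function rates the mixture exactly as highly as vanilla.
for util in (u, u_prime):
    assert expected_utility(util, mixture) == util["vanilla"]

# Recover the rescaling: u' = scale * u + shift.
shift = u_prime["none"]                          # value u' gives where u is 0
scale = u_prime["chocolate"] - u_prime["none"]   # gap u' gives where u has gap 1
assert all(u_prime[o] == scale * u[o] + shift for o in u)
print(scale, shift)
```

Any other choice of a positive `scale` and arbitrary `shift` would pass the same indifference check.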

If Popopo has something we could describe as a unique, "canonical" utility function, it's certainly not a function from \(\mathcal{O} \rightarrow \mathbb{R}\).

In a future post (fingers crossed), I'll talk a little bit more about equivalence between utility functions, and attempt to translate meaningful statements about utility functions into the parlance of programming (specifically type systems).
